This is The Internet Report, where we analyze outages and trends across the Internet through the lens of ThousandEyes Internet and Cloud Intelligence. I’ll be here every other week, sharing the latest outage numbers and highlighting a few interesting outages. As always, you can read the full analysis below or listen to the podcast for firsthand commentary.

Internet Outages & Trends

Outages connected to configuration mishaps were a common theme last year, and we’ve continued to see incidents like these in 2025. As teams look to deploy features, these changes sometimes cause unexpected disruptions—particularly in distributed architectures, where applications consist of various components that may operate on a mix of in-house and third-party infrastructure. This makes it easy for a team focused on one component to make a change without recognizing the potential impact it could have on another component.

The start of a new year often brings a wave of configuration-related incidents as teams begin rolling out new code releases and modifications after the holiday break. This year has been no exception, with configuration changes triggering back-to-back outages at Asana in early February. Configuration-related issues may also have contributed to recent disruptions at Barclays, ChatGPT, Jira, and Discord.

Read on to learn more.

Asana Outages

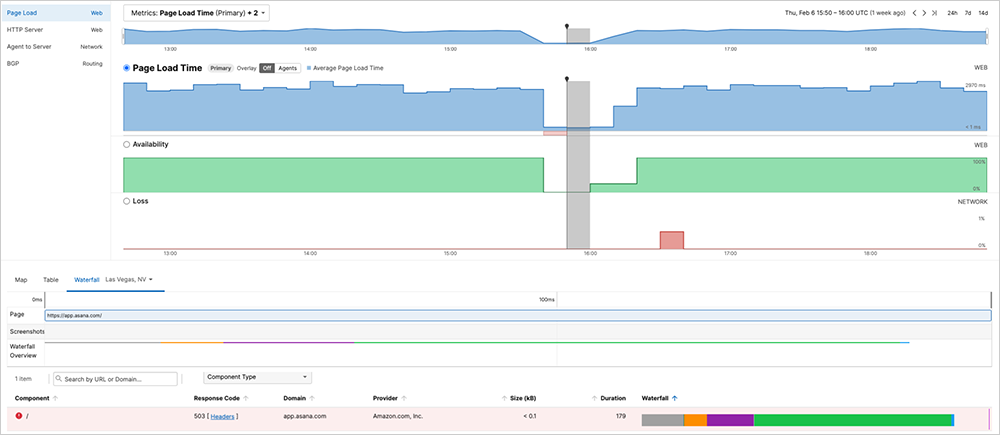

On February 5 and 6, Asana, the cloud-based work management software platform, experienced similar back-to-back outages, both reportedly caused by configuration changes. During the February 5 outage, Asana users in the U.S. couldn’t log in or access the service. Asana reported a high level of errors during the outage, and its post-incident report revealed that “a configuration change caused a large increase in server logs, overloading logging infrastructure and causing server restarts.” This led to a cascading failure as other servers also became overloaded.

During the outage, ThousandEyes observed several factors consistent with a backend issue caused by a configuration change. The service frontend was accessible, with no significant network conditions coinciding with the outage, indicating backend system problems rather than network connectivity issues.

ThousandEyes also observed a reduction in page load times. While this could initially look like improved performance, it coincided with a drop in the number of components that make up the page. In other words, pages loaded faster because parts of them were not loading at all: although the frontend was reachable, the service could not retrieve all the components it needed to function normally.
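
The reasoning above, that a faster page paired with fewer components signals a partial load rather than a genuine speedup, can be sketched as a simple check. This is a minimal illustration, not how ThousandEyes computes it; `PageLoadSample`, `looks_like_partial_load`, the 20% threshold, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PageLoadSample:
    # Hypothetical record of one page load measurement.
    load_time_ms: float   # total time to load the page
    component_count: int  # objects fetched (scripts, images, API calls, ...)


def looks_like_partial_load(baseline: PageLoadSample,
                            current: PageLoadSample,
                            min_component_drop: float = 0.2) -> bool:
    """Return True when a page got faster *and* fetched noticeably fewer
    components than its baseline, i.e. a likely partial load rather than
    a genuine performance improvement. (Hypothetical helper.)"""
    if baseline.component_count == 0:
        return False  # no meaningful baseline to compare against
    faster = current.load_time_ms < baseline.load_time_ms
    drop = 1 - current.component_count / baseline.component_count
    return faster and drop >= min_component_drop


# Invented numbers: the page loads in a third of the usual time,
# but only 35 of the usual 120 components arrive.
print(looks_like_partial_load(PageLoadSample(2400, 120), PageLoadSample(800, 35)))  # True
```

Checking both signals together matters: a shorter load time alone would be misread as an improvement.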

Once Asana rolled back the configuration change that had caused issues, the application appeared to return to its normal functioning state.

However, a day later, Asana experienced a second outage with similar characteristics, which the company also attributed to a configuration change. During this outage, users across the globe couldn’t use Asana, with the app, API, and mobile apps all impacted. Asana reported that a configuration change crashed some of its servers, causing 20 minutes of complete downtime. The company rolled back the configuration but noted that “this event triggered a cascade failure in network routing components.”

ThousandEyes observations confirm Asana's account of this second outage. During the incident, we observed a number of HTTP 502 Bad Gateway errors. These errors typically indicate that a gateway or proxy server received an invalid response from an upstream server, suggesting that the root cause may lie in a backend service, whether a misconfiguration, a server overload, or a communication failure between services.

As the outage continued, the server began returning HTTP 503 Service Unavailable, which indicates that it could not process incoming requests, possibly because it was overwhelmed with traffic or undergoing temporary maintenance. These signs of possible maintenance may reflect Asana’s efforts to resolve the outage.

The presence of server-side status codes suggests that the requests were reaching the frontend of the Asana service, indicating that the network connection was likely not the cause of the outage. ThousandEyes’ observations confirmed this, as the page continued to load, albeit with a reduced component count. The fact that the issue appeared to be localized in the U.S. and that there were no significant network outages during this time further supported the idea that the problem stemmed from a backend service.
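
A rough way to encode this triage logic is to separate requests that never received an HTTP response from those that received a server-side (5xx) error. The sketch below assumes a hypothetical `fault_domain_hint` helper that takes a list of observed status codes, with `None` standing in for a request that got no response at all; it is a simplification (a 502 can also come from a CDN or load balancer), not a diagnostic tool.

```python
from typing import List, Optional


def fault_domain_hint(status_codes: List[Optional[int]]) -> str:
    """Give a rough fault-domain hint from a batch of test requests.

    Each entry is the HTTP status code a request received, or None if the
    request got no HTTP response at all (e.g., DNS failure, TCP timeout).
    Hypothetical helper for illustration only.
    """
    if not status_codes:
        return "no data"
    if all(code is None for code in status_codes):
        # Nothing answered at the HTTP layer: suspect the network or DNS.
        return "network: requests never reached the service"
    server_errors = sorted({c for c in status_codes if c is not None and 500 <= c <= 599})
    if server_errors:
        # A 5xx is generated by a server, so the request crossed the network
        # and reached the frontend; the failure sits behind it.
        return "backend: frontend reachable but returned " + ", ".join(map(str, server_errors))
    return "no server-side errors observed"


print(fault_domain_hint([200, 502, 502, 503]))
# backend: frontend reachable but returned 502, 503
```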

After Asana rolled back the configuration change, ThousandEyes observed that the system returned to normal functioning.

Since both Asana outages stemmed from configuration changes and occurred in such quick succession, they may be related to the same maintenance or product update. While we can’t state this definitively, outages like these often come in pairs as companies reattempt a change they’d tried before, this time with additional safeguards in place.

After the second outage, Asana reported that it has implemented new processes to avoid similar issues going forward, adding a validation step and updating networking components to guard against this type of cascade failure. The company is also transitioning to staged configuration rollouts so that it can catch similar issues before they cause downtime for customers.
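
Staged rollouts generally push a change to a small slice of servers first and widen it only while health checks pass. The following is a minimal sketch under assumed names: `staged_rollout`, the `apply_config`, `rollback_config`, and `error_rate` callbacks, the stage fractions, and the 2% error threshold are all hypothetical and do not describe Asana's actual process.

```python
from typing import Callable, List, Sequence


def staged_rollout(servers: Sequence[str],
                   apply_config: Callable[[str], None],
                   rollback_config: Callable[[str], None],
                   error_rate: Callable[[Sequence[str]], float],
                   stages: Sequence[float] = (0.01, 0.1, 0.5, 1.0),
                   max_error_rate: float = 0.02) -> bool:
    """Push a config change to growing fractions of servers (hypothetical).

    After each stage, measure the error rate on the servers changed so far.
    If it exceeds max_error_rate, roll back every changed server and stop.
    Returns True if the rollout completed, False if it was rolled back.
    """
    changed: List[str] = []
    for fraction in stages:
        target = max(1, int(len(servers) * fraction))
        # Only touch servers not already changed in an earlier stage.
        for server in servers[len(changed):target]:
            apply_config(server)
            changed.append(server)
        if error_rate(changed) > max_error_rate:
            for server in reversed(changed):
                rollback_config(server)
            return False
    return True


fleet = [f"server-{i}" for i in range(100)]
live = set()
# Simulated bad change: every changed server starts failing.
ok = staged_rollout(fleet, live.add, live.discard, lambda changed: 1.0)
print(ok, len(live))  # False 0: only server-0 was touched, then rolled back
```

The design choice is to bound the blast radius: a bad change is caught after affecting 1% of the fleet instead of all of it.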

As ITOps teams manage services built from many seemingly disparate, distributed components working together, any change in any component or service within the complete service delivery chain, whether a configuration adjustment, an upgrade, or a fault, can severely degrade the service’s overall performance. Given the growing complexity of these components and their interactions, it’s impossible to anticipate every possible scenario. It may also be impractical to inform every stakeholder about an impending change, and it can be difficult to identify everyone a change might affect. However, by maintaining a holistic view of the entire service delivery chain, including all components and dependencies, you can quickly identify the fault domain when unexpected problems arise and take mitigation steps, such as rolling back configurations.

Barclays Outage

On January 31, the major U.K.-based bank Barclays experienced an outage that may also have been connected to service updates. During the incident, customers began experiencing intermittent errors with payments and transfers. Thousands of individuals and businesses couldn’t transfer money or check their balances on the Barclaycard app, Barclaycard Online Servicing, the Barclays app, online banking, and telephone banking.

When diagnosing an outage, it's just as important to understand what the issue isn’t as what it is. During the disruption, ThousandEyes did not observe any significant network conditions affecting connectivity to Barclays' customer-facing services. This indicates that the services were reachable and network connectivity was not the issue; the problem most likely lay within Barclays' backend systems.

Additionally, ThousandEyes saw no problems reaching the frontend, including none involving third-party services or cloud providers. The disruption seemed specific to Barclays and did not appear to affect other financial institutions, suggesting that the issue was unlikely to reside in a shared third-party service or system.
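
The peer comparison works by elimination: any dependency that healthy peers also rely on is an unlikely culprit. The sketch below illustrates that idea with a hypothetical `suspect_dependencies` function and made-up service and dependency names; real dependency maps are far larger and rarely this clean.

```python
from typing import Dict, Set


def suspect_dependencies(deps: Dict[str, Set[str]], affected: Set[str]) -> Set[str]:
    """Dependencies shared by every affected service and used by no healthy one.

    Anything a healthy peer also relies on is treated as exonerated.
    Hypothetical helper for illustration only.
    """
    if not affected:
        return set()
    healthy = set(deps) - affected
    common = set.intersection(*(deps[s] for s in affected))
    used_by_healthy = set().union(*(deps[s] for s in healthy))
    return common - used_by_healthy


# Invented dependency map: three banks sharing a CDN and, partly, a cloud provider.
deps = {
    "bank_a": {"cdn_x", "cloud_y", "bank_a_core"},
    "bank_b": {"cdn_x", "cloud_y", "bank_b_core"},
    "bank_c": {"cdn_x", "bank_c_core"},
}
print(suspect_dependencies(deps, {"bank_a"}))  # {'bank_a_core'}
```

If only one institution fails, the shared CDN and cloud provider drop out and only its own systems remain suspect; if `bank_a` and `bank_b` failed together, `cloud_y` would surface instead.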

ChatGPT Disruption

On February 5, OpenAI's ChatGPT experienced a disruption that appeared to primarily affect developers and businesses that had integrated ChatGPT into their applications. Although OpenAI had not officially confirmed the incident’s cause at the time of writing, the nature of the disruption pointed to backend issues. Users appeared able to access the platform's frontend but ran into problems when using various functions and features, implying that the root cause lay within the backend infrastructure responsible for processing user requests and managing data.

This event occurred shortly after OpenAI introduced a new deep research capability in ChatGPT and launched an education-specific version for over 500,000 students and faculty in the California State University system.

During the disruption, ThousandEyes observed longer processing times and reduced performance for some API calls to backend services. In some instances, response times were nearly twice as long as those observed before the disruption.
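
A claim like "nearly twice as long" amounts to comparing current response times against a pre-incident baseline. A minimal sketch, assuming a hypothetical `slowdown_ratio` helper that compares medians (medians are less sensitive to a few outlier requests than means); the sample values are invented for illustration.

```python
from statistics import median
from typing import Sequence


def slowdown_ratio(baseline_ms: Sequence[float], current_ms: Sequence[float]) -> float:
    """Current median response time divided by the pre-incident median.
    (Hypothetical helper; a ratio near 2.0 means roughly twice as slow.)"""
    return median(current_ms) / median(baseline_ms)


# Invented samples for one API endpoint, in milliseconds.
before = [210, 190, 200, 205, 195]
during = [380, 400, 390, 385, 395]
print(slowdown_ratio(before, during))  # 1.95
```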

Jira Outage

Starting around 10:44 AM (UTC) on February 5, Jira, a product from Atlassian used by developers and others, experienced an outage that rendered the platform slow or inaccessible for some users. Intermittent errors affected various services, including content viewing, creating and editing, authentication and user management, search, notifications, administration, the marketplace, mobile functionality, purchasing and licensing, signup, and automation.

During the outage, the services appeared reachable, with no significant network issues observed, indicating that the problem likely resided in the backend. Within about 25 minutes, Atlassian announced that it had identified the root cause and mitigated the problem, though it noted that intermittent errors persisted into the following day.

This outage followed a similar incident on February 3. Again, indications pointed to a backend services issue rather than network accessibility.

While no direct correlation has been established and we can’t say for sure whether this issue was configuration-related, it is common for issues like these to come in waves. Following a mitigation or rollback, subsequent attempts to implement an internal change or patch can often lead to similar issues cropping up.

Discord Outage

On February 4, Discord—the communications and social media platform—experienced an issue that affected users' ability to send and receive messages, although they could still connect to the platform. This indicated that the problem likely originated from backend services related to messaging rather than from connectivity or authentication issues.

Discord did not confirm the cause of the disruption but acknowledged that the issue was within its systems and confirmed that a fix had been implemented. This was consistent with ThousandEyes observations: during the outage, connectivity from multiple regions to Discord’s front door showed no significant network conditions that would indicate a network-related issue. Because the service was reachable from multiple regions simultaneously, and through different regional frontend servers, the common factor was most likely a service or system within Discord's backend.
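
The multi-region argument can be expressed as a small decision rule: if every vantage point reaches the front door cleanly yet sees the same application failure, the shared factor most likely sits behind the front door. The `RegionResult` type, `common_fault_domain` function, and region names below are hypothetical, and the rule is a simplification of real-world triage.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RegionResult:
    # Hypothetical per-region test outcome.
    region: str
    network_ok: bool  # path to the front door showed no significant loss/latency
    app_ok: bool      # application transaction (e.g., send a message) succeeded


def common_fault_domain(results: List[RegionResult]) -> str:
    if not results:
        return "no data"
    failing = [r for r in results if not r.app_ok]
    if not failing:
        return "healthy"
    if any(not r.network_ok for r in failing):
        return "network: some failing regions also show path problems"
    if len(failing) == len(results):
        # Every vantage point reaches the front door cleanly, yet all fail:
        # the shared factor most likely sits behind the front door.
        return "shared backend"
    return "regional: " + ", ".join(r.region for r in failing)


probes = [RegionResult(r, network_ok=True, app_ok=False)
          for r in ("us-east", "eu-west", "ap-south")]
print(common_fault_domain(probes))  # shared backend
```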

By the Numbers

Let’s close by taking a look at some of the global trends ThousandEyes observed over recent weeks (January 27 - February 16) across ISPs, cloud service provider networks, collaboration app networks, and edge networks.

- After an initial drop, the total number of global outages trended upward for the rest of the observed period. In the first week (January 27 - February 2), ThousandEyes noted a 16% decrease, with outages dropping from 395 to 331. The following week (February 3-9) reversed this trend, with outages increasing from 331 to 353, a 7% rise compared to the previous week. The upward trend continued into the next week (February 10-16), with outages rising from 353 to 398, a 13% increase.

- U.S. outages followed a different pattern. Initially, they decreased from 195 to 188, a 4% drop compared to the previous week. During the second week (February 3-9), outages rose 12%, from 188 to 210. Unlike the global trend, this was followed by a 7% decrease from February 10-16, when the number of outages fell from 210 to 196.

- From January 27 to February 16, an average of 55% of all network outages occurred in the United States, an increase from the 49% reported during the previous period (January 13 to 26). This 55% figure continues a trend observed throughout 2024, where U.S.-centric outages accounted for at least 40% of all recorded outages. And it appears a new trend may be forming this year: On average, U.S.-centric outages have made up more than 50% of all recorded outages in 2025 so far.
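
The week-over-week percentages above can be reproduced from the reported counts. This sketch assumes the U.S. share is computed from pooled totals across the three weeks; the report does not state its method, and averaging the three weekly shares also gives roughly 55%. `weekly_changes` and the variable names are illustrative.

```python
from typing import List, Sequence


def weekly_changes(counts: Sequence[int]) -> List[int]:
    """Week-over-week percentage changes, rounded to whole percents."""
    return [round((new - old) / old * 100) for old, new in zip(counts, counts[1:])]


# Counts from the report: the week before January 27, then
# January 27 - February 2, February 3-9, and February 10-16.
global_outages = [395, 331, 353, 398]
us_outages = [195, 188, 210, 196]

print(weekly_changes(global_outages))  # [-16, 7, 13]
print(weekly_changes(us_outages))      # [-4, 12, -7]

# Assumption: U.S. share computed from pooled totals over the three weeks.
us_share = sum(us_outages[1:]) / sum(global_outages[1:]) * 100
print(round(us_share))                 # 55
```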