This is The Internet Report, where we analyze outages and trends across the Internet through the lens of ThousandEyes Internet and Cloud Intelligence. I’ll be here every other week, sharing the latest outage numbers and highlighting a few interesting outages. This week, we’re also exploring the fascinating world of event IT with special guest Dominic Hampton, Managing Director of attend2IT. We’ll discuss what it takes to deliver successful digital experiences at major events like concerts and conferences—and takeaways ITOps teams at enterprise companies can apply to their own events and day-to-day operations. As always, you can read the full analysis below or listen to the podcast for firsthand commentary.

Internet Outages & Trends

What does it take to transform an empty field into a vibrant temporary event venue, with all the connectivity and IT in place to deliver amazing experiences for attendees? And when the concert or festival begins, how can you ensure everything goes smoothly? This week, we’re diving into just that, exploring best practices from the event IT space and relevant takeaways for all ITOps teams.

We’ll also cover insights from recent disruptions in which network misconfigurations, authentication issues, and change management practices appeared to cause customer-facing problems.

Read on to learn more.

Behind the Scenes of Event IT: Lessons for Enterprise ITOps

When it comes to connectivity for events, what looks like it should be a repeatable setup and process often is not. Cost, time, user needs, and other factors influence how a major event such as a music festival is networked, whether with satellite, cellular, point-to-point, or fixed-line connections.

The individual characteristics of an event significantly influence its networking and the configuration of that network. For instance, at some large events, attendees arrive at staggered intervals over several hours, while at others, most attendees arrive at the same time. Each situation may require a different networking approach. IT teams must also make crucial decisions regarding redundancy and backup: Which networked services—such as ticket scanning, security controls, CCTV, or services for attendees—must remain consistently operational and prioritized? Additionally, which services can tolerate some latency or degradation without causing major disruptions?
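The prioritization question can be made concrete with a small model. The sketch below is a hypothetical illustration, not any production setup: it grants a constrained uplink to services in strict priority order, so critical services such as ticket scanning are served in full and lower-priority services absorb any shortfall. The service names, priorities, and bandwidth figures are all assumptions; in a real deployment this policy would typically be enforced with QoS configuration on network equipment rather than in application code.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    priority: int        # lower number = more critical (hypothetical scale)
    demand_mbps: float   # bandwidth the service would like to use


def allocate(services: list[Service], capacity_mbps: float) -> dict[str, float]:
    """Grant bandwidth in strict priority order until the link is exhausted.

    Critical services are fully served first; whatever remains flows to
    lower-priority services, which absorb any shortfall (degradation).
    """
    remaining = capacity_mbps
    grants: dict[str, float] = {}
    for svc in sorted(services, key=lambda s: s.priority):
        grant = min(svc.demand_mbps, remaining)
        grants[svc.name] = grant
        remaining -= grant
    return grants


# Hypothetical festival uplink of 100 Mbps shared by four services.
services = [
    Service("attendee_wifi", priority=3, demand_mbps=80),
    Service("ticket_scanning", priority=0, demand_mbps=10),
    Service("security_controls", priority=1, demand_mbps=5),
    Service("cctv", priority=2, demand_mbps=30),
]
print(allocate(services, capacity_mbps=100))
```

With these hypothetical numbers, ticket scanning, security controls, and CCTV get everything they ask for, while attendee Wi-Fi receives 55 of its 80 Mbps. Strict priority is the simplest policy, but it can starve low-priority traffic entirely when the link is saturated; weighted fair queuing is a common alternative when every service needs some guaranteed share.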

The best practices for assuring digital experiences at events highlight helpful reminders for enterprise ITOps teams as well:

- Design With Purpose: Whether designing a network for a concert or a SaaS company, ITOps teams need to build it to support the unique characteristics of the workload it underpins. This involves a deep understanding of your users and your end-to-end service delivery chain, including any third-party providers you rely on.

- Visibility Matters: In dynamic environments like large music festivals, with temporary networks and numerous users interacting in a confined space, things can easily go wrong. It’s crucial to detect and respond to potential issues quickly so that services remain accessible and problems don’t affect the attendee experience. The same principle applies in more permanent corporate environments. ITOps teams need comprehensive visibility across their end-to-end service delivery chain to efficiently identify and mitigate issues, and to proactively optimize their services so customer experiences keep improving.

Listen to the podcast for more event IT insights from Mike and special guest Dominic Hampton.

Microsoft Azure Incident

On January 8, users of a range of Azure-based services, including Databricks, OpenAI, and other apps and databases, experienced connectivity issues, timeouts, connection drops, and other problems across a window of approximately six hours.

The root cause was a networking configuration change in a single availability zone in East US 2, made “during an operation to enable a feature,” according to Azure's post-incident report.

Important internal indexing data was lost because a manual process was executed incorrectly: it was run in parallel rather than sequentially. This error impacted “network resource provisioning for virtual machines in East US 2, Az01,” manifesting as “a loss of connectivity affecting VMs that customers use, in addition to the VMs that underpin [Azure’s own] PaaS service offerings.”

The incident lasted several hours due to the intensive nature of recovery and restoration from this type of issue, high CPU usage caused by the incident, and “unforeseen challenges” encountered during rebuilding work. However, Microsoft said it expedited some recovery aspects by tackling multiple builds simultaneously. The company is also working on improvements to a recovery mechanism that could potentially reduce future recovery times to under two hours.

Additionally, as the issue was triggered by “certain operations [being] performed incorrectly,” Microsoft will only allow a set of “designated experts” to perform these operations going forward.

Microsoft also acknowledged that it sent “inappropriate” communication to customers during the outage, suggesting that customers take certain business continuity actions without providing the full context of the incident. While Microsoft plans to adjust its future customer communication, this event underscores why it’s critical for organizations to have independent, end-to-end visibility of their environment. With independent data, customers would likely have recognized the conflicting advice and responded appropriately to their specific situations.

Microsoft 365 Disruption

On January 13, Microsoft 365 users were unable to access some apps due to an issue with multi-factor authentication (MFA). The disruption also reportedly impacted MFA registration and reset processes.

Systems administrators in Norway, Spain, the Netherlands, and the United Kingdom reported sign-on difficulties, and Microsoft later clarified that those affected were primarily located in Western Europe.

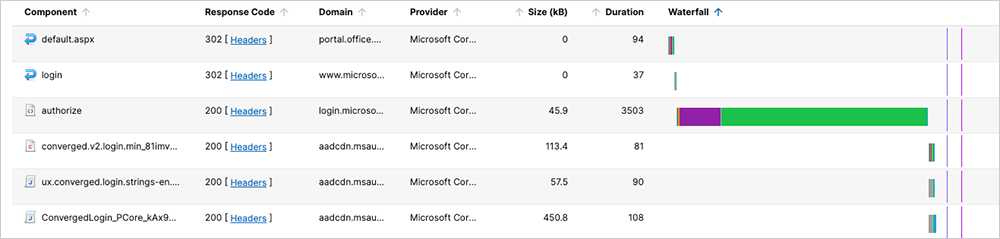

The issue manifested as excessive wait times for systems attempting to authenticate, although there appeared to be no network-related problems.
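Telling an authentication backend problem apart from a network problem usually comes down to decomposing response time. If the network round-trip time stays near its baseline while the total time to a response balloons, the extra delay is most likely being spent server-side. The sketch below assumes you already collect both measurements (for example, from a synthetic test) and treats total time minus one round trip as a rough stand-in for server processing time, which is a simplification since real sign-in flows involve several exchanges. The function name, baselines, and the 2x threshold are hypothetical.

```python
def attribute_delay(rtt_ms: float, total_ms: float,
                    baseline_rtt_ms: float, baseline_server_ms: float,
                    factor: float = 2.0) -> list[str]:
    """Return the likely source(s) of extra latency for one measurement.

    Server time is approximated as total response time minus one network
    round trip. A component is flagged when it exceeds its baseline by
    `factor` (a hypothetical threshold).
    """
    server_ms = total_ms - rtt_ms
    causes = []
    if rtt_ms > baseline_rtt_ms * factor:
        causes.append("network")
    if server_ms > baseline_server_ms * factor:
        causes.append("application")
    return causes or ["healthy"]


# Hypothetical sign-in measurement: normal 20 ms RTT, but a 4-second response.
print(attribute_delay(rtt_ms=20, total_ms=4020, baseline_rtt_ms=20, baseline_server_ms=200))
```

Here the network looks normal and the roughly four seconds of extra wait is attributed to the application, which matches the pattern of long authentication waits without network-related problems.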

In its initial response, Microsoft redirected user traffic to alternate, fully operational infrastructure. This mitigated the immediate impact on users and restored service availability while the company investigated the root cause. After analyzing the systems involved, Microsoft fully resolved the problem approximately four hours later, allowing normal operations to resume; the early redirection likely kept user-facing downtime to a minimum.
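At its simplest, redirecting traffic to healthy infrastructure means sending requests to the first endpoint, in preference order, that passes a health check. The sketch below assumes a hypothetical list of endpoints and health results supplied by some external checker; it says nothing about how Microsoft actually rerouted traffic, and in practice this logic usually lives in DNS-based traffic management or a global load balancer rather than in clients.

```python
from typing import Callable, Optional


def pick_endpoint(endpoints: list[str],
                  is_healthy: Callable[[str], bool]) -> Optional[str]:
    """Return the first healthy endpoint in preference order, or None if all are down."""
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    return None


# Hypothetical endpoints, most preferred first, with the primary failing its health check.
ENDPOINTS = ["auth-primary.example.net", "auth-secondary.example.net", "auth-tertiary.example.net"]
health = {
    "auth-primary.example.net": False,
    "auth-secondary.example.net": True,
    "auth-tertiary.example.net": True,
}

print(pick_endpoint(ENDPOINTS, lambda ep: health.get(ep, False)))  # auth-secondary.example.net
```

Returning None when nothing is healthy leaves the caller to decide how to fail, for example by serving a clear error instead of timing out.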

Atlassian Bitbucket Cloud Outage

On January 21 at 3:30 PM (UTC), Atlassian’s Bitbucket Cloud was impacted by a database issue that affected the website, APIs, and Pipelines services. While the incident was detected internally “within eight minutes,” it took “about three hours and 47 minutes” to fully recover services.

The problems were attributed to database contention on high-traffic tables, which saturated the Bitbucket database. A saturated database can arise from multiple underlying issues and is often indicative of scaling challenges. In Atlassian’s case, increased API traffic triggered write contention on a high-traffic table, driving up CPU usage and degrading database performance. This ultimately affected the availability of core services (web, API, and Pipelines).

When building redundancy options, it’s important to consider the risks of relying on a single instance for code management. That dependence can create a range of operational challenges: it becomes harder to roll back changes efficiently or deploy new builds, and there is no alternative or backup system to fall back on when the primary degrades.

Atlassian has also drawn a number of lessons from the incident, pledging “additional maintenance on core database tables,” introducing throttling on write-heavy operations, and improving “database observability to isolate failures.”
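Atlassian doesn’t describe how its throttling works. One common way to throttle a write-heavy operation is a token bucket: each write consumes a token, tokens refill at a fixed rate up to a burst capacity, and writes that find the bucket empty are rejected or deferred instead of adding to contention on the hot table. The sketch below is a generic, single-process illustration with hypothetical rate and capacity values, not Atlassian’s implementation; the injectable clock exists only to make the example deterministic.

```python
import time
from typing import Callable


class TokenBucket:
    """Permit `rate` operations per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        """Consume one token if available; return False if the caller should back off."""
        now = self.clock()
        # Refill for the time elapsed since the previous call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical limit: 5 writes per second to a hot table, bursts of up to 10.
fake_now = [0.0]
bucket = TokenBucket(rate=5, capacity=10, clock=lambda: fake_now[0])
burst_accepted = sum(bucket.try_acquire() for _ in range(15))  # 15 writes arrive at once
fake_now[0] = 1.0                                              # one second later
later_accepted = sum(bucket.try_acquire() for _ in range(15))
print(burst_accepted, later_accepted)  # 10 5
```

The first burst is capped at the bucket’s capacity of 10, and after one second only the 5 refilled tokens are available, so sustained write pressure is held to the configured rate. Enforcing one limit across many application servers would require shared state, which this single-process sketch does not attempt.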

TikTok’s Shutdown

U.S. TikTok users were recently unable to access the social media platform after it shut down to comply with a law (and Supreme Court decision) that banned the app in the United States. The approach TikTok used to take its platform offline offers a case study in how to turn off a global app for a subset of users in a specific geography.

ThousandEyes observed the initial impacts of the shutdown around 3:30 AM (UTC) on January 19, as access to www.tiktok.com appeared to become restricted. Page load times decreased, pointing to a reduction in the number of page components: pages still loaded, but likely without their full content, and users received error messages.

Following this partial page loading, requests were redirected to a new U.S.-based landing page. This page appeared to use geoblocking, restricting access to content based on the user’s geographic location.

Around 5:15 AM (UTC), the DNS entry appeared to be removed, making U.S. TikTok services unreachable.

At approximately 2:35 PM (UTC), the service appeared to come back online. Initially, the domain name started to resolve again, redirecting to the U.S. landing page. By 4:10 PM (UTC), the redirection and page components returned to their pre-shutdown state, and the service seemed to operate normally once more.
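The reported timestamps also make it easy to work out how long each phase lasted. This quick calculation uses only the approximate UTC times given above, all on January 19:

```python
from datetime import datetime

# Approximate observation times (UTC) on January 19, from the timeline above.
events = [
    ("access restricted", "03:30"),
    ("DNS entry removed", "05:15"),
    ("domain resolving again", "14:35"),
    ("pre-shutdown state restored", "16:10"),
]

# Parse the times (all on the same day, so the date part can be ignored).
times = [(label, datetime.strptime(hhmm, "%H:%M")) for label, hhmm in events]

# Duration of each phase, then the whole episode.
for (label_a, t_a), (label_b, t_b) in zip(times, times[1:]):
    print(f"{label_a} -> {label_b}: {t_b - t_a}")
print("total:", times[-1][1] - times[0][1])
```

That works out to roughly 1 hour 45 minutes of restricted access before the DNS entry disappeared, about 9 hours 20 minutes with the domain not resolving, and about 12 hours 40 minutes from first impact until the service appeared to be back to normal.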

Explore TikTok’s shutdown further on the ThousandEyes platform (no login required).

TikTok’s actions show how a service owner can take down its own service. This type of technical takedown, which removes a site from the Internet in a specific geographic area by making its services unreachable through network operations, only works cleanly if the service owner initiates it. External parties can take various actions to impact service availability, but such a takedown would likely be neither as clean nor as complete.
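Geoblocking, like the behavior TikTok’s U.S. landing page appeared to show, generally works by mapping a client’s IP address to a location and choosing what to serve based on the result. The sketch below uses a tiny, hypothetical prefix-to-country table built from reserved documentation address ranges (RFC 5737); real services rely on commercial or CDN-provided geolocation data, and TikTok’s actual mechanism is not public.

```python
import ipaddress
from typing import Optional

# Hypothetical geolocation table using RFC 5737 documentation prefixes.
GEO_TABLE = {
    ipaddress.ip_network("192.0.2.0/24"): "US",
    ipaddress.ip_network("198.51.100.0/24"): "GB",
    ipaddress.ip_network("203.0.113.0/24"): "JP",
}
BLOCKED_COUNTRIES = {"US"}


def country_for(ip: str) -> Optional[str]:
    """Look up the country for an IP address, or None if it is not in the table."""
    addr = ipaddress.ip_address(ip)
    for net, country in GEO_TABLE.items():
        if addr in net:  # False automatically when IP versions differ
            return country
    return None


def route_request(ip: str) -> str:
    """Decide what to serve: the restricted landing page or normal content."""
    if country_for(ip) in BLOCKED_COUNTRIES:
        return "us_landing_page"
    return "normal_content"


print(route_request("192.0.2.10"), route_request("198.51.100.7"))
```

Clients whose address isn’t in the table fall through to normal content here; a stricter policy might block unknown locations instead, at the cost of more false positives.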

DeepSeek Incidents

As AI service and model builder DeepSeek rode a wave of excitement in late January on the back of a new model release, it experienced two “service unavailable” incidents on January 26 and two more the following day, alongside a “cannot login/signup” issue. Also on January 27, DeepSeek began experiencing degraded performance, and on January 28, the company said it had been impacted by “large-scale malicious attacks” aimed at its infrastructure.

It’s possible that an influx of traffic caused the earlier disruptions after the release of DeepSeek’s new model. Businesses can often experience performance issues during high-load events, such as the launch of a new model or product. While a service’s architecture may be designed for scalability, it may not be specifically tailored to handle the volume of simultaneous requests triggered by spikes in publicity or external events, as we discussed in a previous Internet Report episode.

What can companies do to guard against such disruptions during high-traffic periods? As noted, such applications are typically built for scale and run on a distributed architecture that leverages CDNs. However, bottlenecks can still appear. For example, processing many concurrent registrations, along with a higher frequency of API requests and general queries, can strain the service. To address these issues, teams can apply mitigation and throttling techniques that maintain a smooth flow of requests, albeit at a reduced rate.
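One common way to keep a smooth flow of requests at a reduced rate is a leaky bucket used as a queue: bursts are buffered in a bounded queue and drained toward the backend at a constant rate, and requests that arrive when the queue is full are rejected quickly (for example, with HTTP 429 Too Many Requests) rather than piling onto overloaded services. The discrete-time simulation below is a hypothetical illustration with made-up numbers; DeepSeek has not described its own mitigations.

```python
def leaky_bucket(arrivals: list[int], queue_size: int,
                 drain_per_tick: int) -> tuple[list[int], int]:
    """Simulate a leaky-bucket queue over discrete time ticks.

    arrivals[i] is the number of requests arriving at tick i. Returns the
    number of requests passed to the backend at each tick and the total
    number rejected because the queue was full. Requests still queued
    after the last tick are not counted.
    """
    backlog = 0
    processed: list[int] = []
    rejected = 0
    for count in arrivals:
        admitted = min(count, queue_size - backlog)  # only what fits in the queue
        rejected += count - admitted                 # shed the rest early, e.g. HTTP 429
        backlog += admitted
        served = min(backlog, drain_per_tick)        # backend never exceeds this rate
        backlog -= served
        processed.append(served)
    return processed, rejected


# Hypothetical burst: 50 sign-up requests at once, then a trickle of 5.
print(leaky_bucket([50, 5, 0, 0], queue_size=30, drain_per_tick=10))  # ([10, 10, 10, 5], 20)
```

The backend never receives more than 10 requests per tick, however bursty the arrivals; the trade-off is that 20 requests are turned away and admitted requests wait in the queue.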

Public AI services also often see high demand in general. ChatGPT hit capacity limits in its early days, leaving users unable to access the tool. Like any public-facing web-based service, it has also had outages, including a series of disruptions in late 2024.

By the Numbers

Let’s close by taking a look at some of the global trends ThousandEyes observed over recent weeks (January 13-26) across ISPs, cloud service provider networks, collaboration app networks, and edge networks.

- The total number of global outages trended up throughout the observed period. In the first week (January 13-19), ThousandEyes reported an 11% increase in outages, rising from 296 to 328. This upward trend continued into the following week (January 20-26), with the number of outages increasing from 328 to 395, marking a 20% rise compared to the previous week.

- This pattern was clearly reflected in the outages observed in the United States. Outages significantly increased during the first week (January 13-19), rising 34%. This was followed by a 24% increase from January 20-26, with U.S. outage numbers rising from 157 to 195.

- From January 13-26, an average of 49% of all network outages occurred in the United States, up from the 44% reported during the previous period (December 30 - January 12). This latest 49% figure represents a notable rise compared to the start of 2024, when 44% of outages were U.S.-centric, and a significant increase from the corresponding period in 2023, when only 23% of outages were U.S.-centric.
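The week-over-week percentages above can be reproduced from the reported counts, and the same counts give the weekly U.S. share of outages. In this quick check, 296 is the global count for the week before January 13-19, as implied by the first bullet; how ThousandEyes computes its average U.S. share isn’t stated, so using the simple mean of the two weekly shares is an assumption.

```python
def pct_change(old: float, new: float) -> float:
    """Week-over-week change, in percent."""
    return (new - old) / old * 100


# Weekly outage counts from the bullets above.
global_prev, global_w1, global_w2 = 296, 328, 395  # previous week, Jan 13-19, Jan 20-26
us_w1, us_w2 = 157, 195                            # U.S. outages, Jan 13-19 and Jan 20-26

print(f"global, week 1: {pct_change(global_prev, global_w1):.1f}%")  # 10.8%
print(f"global, week 2: {pct_change(global_w1, global_w2):.1f}%")    # 20.4%
print(f"U.S., week 2:   {pct_change(us_w1, us_w2):.1f}%")            # 24.2%

# U.S. share of all outages in each week, and the mean of those two shares.
share_w1 = us_w1 / global_w1 * 100
share_w2 = us_w2 / global_w2 * 100
print(f"U.S. share: {share_w1:.1f}%, {share_w2:.1f}%, mean {(share_w1 + share_w2) / 2:.1f}%")
```

These round to the 11%, 20%, and 24% figures reported, and the weekly U.S. shares (about 47.9% and 49.4%, a mean of about 48.6%) are consistent with the roughly 49% average.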